For an artificial intelligence (AI) to reach a state of perceived intelligence, it first has to learn and adapt to its task. These tasks are normally quite specific, and lead to an AI that is far more limited than the androids of sci-fi books and films, which possess artificial general intelligence. In the short term at least, there is a lot more to be gained from these niche AIs than from aiming to create something as versatile as a human being. Because of this, there is great interest in how effectively a machine learns and how accurate it ends up being. Within the broad field of AI the word "learning" is given lots of different prefixes to mean lots of different things. Five of interest in this post are:

- Supervised learning

- Unsupervised learning

- Semi-supervised learning

- Reinforcement learning

- Deep learning

All of these can be imagined to sit within the bubble of machine learning, a term you’ve probably heard before. The main differences between these methods, and why some are more desirable than others for certain tasks, will be briefly looked at now.

Supervised learning is so called because the whole learning process of the program is relatively controlled. When a machine like this learns it is fed training data as the input, and the program is also told the desired answer (the output) for each piece of data. This way the program builds a function mapping the input to the desired output, which it can then use to predict outputs when given new input data. Machine learning programs of all these kinds are usually also fed validation data after the training data, to confirm that the AI works on examples it has not seen before. A basic example of supervised learning in use would be giving a program data that represents the attributes of a house, such as the area of floor space, number of bedrooms, and the location of the house, and then also giving the program the outputs of how much each house sold for. The idea is then to give the program the same kind of data about new houses so that it can estimate an accurate value for them.
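The house price example can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: it uses a single input (floor area), a straight-line model fitted by least squares, and made-up numbers. The names `fit_line` and `predict` are hypothetical helpers, not part of any library.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept to labelled examples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learnt input-to-output mapping to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Training data (made up): inputs are floor areas, outputs are sale prices.
floor_area_m2 = [50, 70, 90, 110]
sale_price = [150_000, 200_000, 250_000, 300_000]

model = fit_line(floor_area_m2, sale_price)

# Validation data (made up): a house held back from training.
validation_area, validation_price = 100, 275_000
validation_error = abs(predict(model, validation_area) - validation_price)

# A new house with no known price.
estimate = predict(model, 80)
print(f"Estimated price: {estimate:.0f}, validation error: {validation_error:.0f}")
```

A real system would use many more attributes (bedrooms, location and so on) and far more houses, but the shape is the same: fit on labelled examples, check on held-back examples, then predict for new ones.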

A somewhat more interesting technique is unsupervised learning. Used for the same task, unsupervised learning can spot patterns similar to those the former method would, but in a more abstract way. The main difference between these two approaches is that in unsupervised learning the program isn’t given any output data to match to the given input data, so the program finds patterns and correlations in the data without being explicitly told what to look for. Because of this, unsupervised learning is used more when the results wanted are not so obvious to the people working with the data. Since this method of learning has less strict instructions and fewer guidelines, it is sometimes seen as closer to general intelligence than supervised learning. Unsupervised learning is commonly used in things like online shopping recommendations, where after you have bought something you are targeted with advertisements based on what other users who bought the same product also bought before or after.
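As a rough sketch of finding patterns without being told what to look for, here is a small k-means clustering routine, one common unsupervised method (the post does not name a specific algorithm, so this choice is an assumption). The data points are made up, and the function names `nearest` and `kmeans` are hypothetical.

```python
def nearest(point, centroids):
    """Index of the centroid closest to the point (squared Euclidean distance)."""
    distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return distances.index(min(distances))


def kmeans(points, k, iterations=10):
    """Group points into k clusters. No labels are supplied at any stage."""
    # Simple deterministic start: use the first k points as initial centroids.
    centroids = [points[i] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(v) / len(cluster) for v in zip(*cluster))
    labels = [nearest(p, centroids) for p in points]
    return centroids, labels


# Made-up data: two loose groups of 2D points.
points = [(1, 1), (9, 9), (1, 2), (2, 1), (8, 9), (9, 8)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

The program is never told which group any point belongs to; the cluster numbers it returns are just arbitrary identifiers for the groups it found.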

Semi-supervised learning is, as the name suggests, partly supervised and partly not. The data given in this approach is typically a small amount of labelled data (as in supervised learning) and a large amount of unlabelled data (as in unsupervised learning). This helps with a few things. It can reduce bias and error, since most of the data has not been labelled by a person who could have made mistakes or skewed it. Not having to label the large majority of the data also helps greatly with time and cost. Semi-supervised learning can be taken advantage of in situations that involve organising things into groups, at less cost than with supervised learning. A program could, for instance, take unlabelled pictures of fruit like apples, bananas and oranges, and put them into groups based on things like colour and shape. On its own it cannot state which group is the oranges, the apples or the bananas, but with a small amount of labelled data it can compare its groups with similar pictures that have a name attached, and so work out which of its pictures correspond to which fruit.
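The fruit example can be sketched as a "cluster, then name the clusters" routine. This is one simple way to do it, not the only one, and every detail here is an assumption made for illustration: each picture is reduced to two made-up numbers (a colour score and an elongation score between 0 and 1), there is exactly one labelled example per fruit, and the function names are hypothetical.

```python
def dist2(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cluster_then_label(labelled, unlabelled, iterations=10):
    """Use a few labelled examples as cluster seeds, then name every unlabelled item.

    labelled:   dict mapping a fruit name to one labelled feature tuple
    unlabelled: list of feature tuples with no names attached
    """
    centroids = dict(labelled)
    for _ in range(iterations):
        # Each group starts with its labelled example, then gathers nearby unlabelled items.
        groups = {name: [labelled[name]] for name in centroids}
        for p in unlabelled:
            closest = min(centroids, key=lambda name: dist2(p, centroids[name]))
            groups[closest].append(p)
        # Move each centroid to the mean of its group.
        centroids = {
            name: tuple(sum(v) / len(group) for v in zip(*group))
            for name, group in groups.items()
        }
    return [min(centroids, key=lambda name: dist2(p, centroids[name])) for p in unlabelled]


# Made-up features: (colour score, elongation score).
labelled = {"apple": (0.1, 0.1), "orange": (0.5, 0.1), "banana": (0.9, 0.9)}
unlabelled = [
    (0.15, 0.12), (0.05, 0.10),
    (0.55, 0.15), (0.45, 0.08),
    (0.85, 0.95), (0.95, 0.85),
]
names = cluster_then_label(labelled, unlabelled)
print(names)
```

Only three items were labelled by hand, yet all six unlabelled items end up with a name, which is where the saving in time and cost comes from.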

Reinforcement learning is quite different in how it learns compared to the previous three. It uses a reward-based system, often with punishment involved as well, to incentivise the program to perform a task well. When the program is presented with a task, it has an observable environment and the ability to perform certain actions. For certain actions it is rewarded and for others it is punished, usually through a numerical score, where a good action might give +1 and a bad one -1, or larger numbers depending on the severity of the consequences of the action. This approach is pretty similar to training your dog to do tricks by giving it treats when it gets them right. A difference between the two is the vast number of ways some problems can be approached. Where rolling over is just one action for the dog to perform, an AI playing chess is faced with many possible actions, each leading to a new set of possible actions, and so on; once all the different moves the opponent can make are added on top, the tree of options is more than a person can comprehend. Thankfully, these chess-playing programs can play millions of games in a couple of hours during their learning phase, and this sheer computing power helps them cope with the vast number of possible options. Something that could seem to be a problem, however, is a path of actions that looks bad at first, earning the AI punishment, but eventually turns out to lead to a better or more efficient solution overall. This can lead to some interesting situations, though. DeepMind’s AlphaZero, a chess program that learnt by just being told the rules and playing games against itself, reached the level of play of Stockfish 8 (a chess-playing program that is consistently ranked near the top) after just 4 hours of training, and after 9 hours of training beat it in a 100-game match (28 wins, 72 draws).
Normally these chess-playing programs would analyse games that had already been played by other people or other programs during their learning phase; AlphaZero, however, only played games against itself to learn. Throughout these games AlphaZero seemed to play differently from other AIs: where you might expect, due to the reward system in reinforcement learning, that it would highly value taking pieces and minimising losses in order to eventually win, it unexpectedly made large sacrifices of valuable pieces to gain positional advantages that led to victory in the long term. AlphaZero also used neural networks to come out on top, something that is a core aspect of the next subject.
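Before moving on, the reward and punishment idea from the reinforcement learning discussion can be made concrete with a toy example. The sketch below uses tabular Q-learning, a standard reinforcement learning method that is far simpler than what AlphaZero does, and it is an assumption of this example rather than something the systems above are known to use. The environment is a made-up five-cell corridor: the leftmost cell is a pit (score -1), the rightmost is the goal (score +1), and the agent can step left or right. All names and parameter values are hypothetical.

```python
import random

ACTIONS = {"left": -1, "right": +1}
PIT, GOAL = 0, 4  # the two terminal cells of the corridor


def step(state, action):
    """Move one cell; return (next_state, reward, episode_finished)."""
    next_state = state + ACTIONS[action]
    if next_state == GOAL:
        return next_state, 1.0, True
    if next_state == PIT:
        return next_state, -1.0, True
    return next_state, 0.0, False


def train(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a score (Q-value) for every state/action pair by trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(5) for a in ACTIONS}
    for _ in range(episodes):
        state, done = rng.choice([1, 2, 3]), False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(list(ACTIONS))  # explore: try a random action
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit best known
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Nudge the estimate towards the reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in (1, 2, 3)}
print(policy)
```

The `gamma * best_next` term is what lets the program value an action for what it leads to later rather than only for its immediate score, which is the same idea behind accepting a short-term loss for a long-term advantage.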

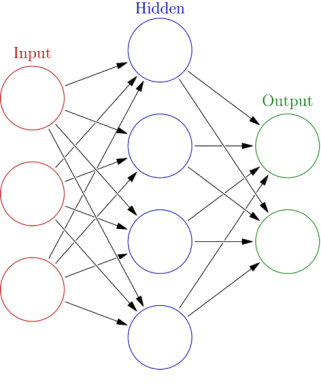

Deep learning uses neural networks, which are loosely modelled on the human brain, to process the data it is given. Deep learning can use supervised, semi-supervised or unsupervised learning, and it can also use reinforcement learning. In a multilayer perceptron model, the artificial neurons in a neural network are connected to each other in layers, with a minimum of three. At the minimum an input and an output layer are needed, as well as a hidden layer in the middle. Every neuron in the input layer is connected to all of the neurons in the middle layer, which then connect to every neuron in the output layer. Each connection between neurons has a weight that scales the information passed along it, and each neuron combines its weighted inputs to decide how strong a signal to send on, or whether to send one at all. These weights are adjusted throughout the training phase to home in on the optimal final state. It is this sandwich of hidden neuron layers that holds the model which assigns outputs to inputs. There are other ways neural networks can be implemented as well, such as convolutional neural networks and recurrent neural networks, and needless to say there is a lot of interest in the field of deep learning at the moment. Some of this interest is actually from neuroscientists who are observing how neural networks arrive at the conclusions they do, as an insight into how the process might work with biological neurons. Due to the presence of hidden layers in these neural networks, though, there are times when an AI can arrive at a result without anyone understanding why it did, or what sort of path it followed to get there, which raises some ethical questions. For instance, if you had an AI judge that found someone guilty without there being a clear reason for how it reached its conclusion, it would be far from instilling trust and could leave potential for undetectable abuse if someone were able to force it to provide a fake answer.
Another situation which may have more relevance at this time is the decision-making process in a self-driving car during an emergency. If the car were faced with its own version of the trolley problem or something similar, involving having to decide between the safety of different people, how could we come to the conclusion that the decision the car had made was fair and reasonable? Along this path there have been AIs developed to try to understand the actions of other AIs, in a bid to keep the ethical problems under control. This methodology, though, raises obvious questions of circularity.
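To make the three-layer structure of a multilayer perceptron described earlier more concrete, here is a minimal sketch of a single forward pass through one hidden layer. It is an illustration only: the layer sizes, the weight values and the choice of a sigmoid activation are all assumptions, the function names are hypothetical, and no training is shown (training would adjust the weights and biases).

```python
import math


def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One fully connected layer: every input feeds every neuron in the layer.

    weights[j][i] is the weight on the connection from input i to neuron j.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)


# Made-up network: 2 inputs, 3 hidden neurons, 1 output neuron.
hidden_weights = [[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[1.0, -1.0, 0.5]]
output_biases = [0.2]

output = forward([1.0, 2.0], hidden_weights, hidden_biases, output_weights, output_biases)
print(output)
```

Even in a network this small, the three hidden values have no obvious human meaning, which hints at why the reasoning of much larger networks is so hard to inspect.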

This was a very brief introduction to some of the main types of machine learning. There is a lot more to say about these methods and many others, especially on the subject of neural networks, but these will have to be explored at a later time. Artificial intelligence as a field will only continue to grow, with new and interesting developments in abundance, and with AI creeping its way into many parts of our lives it will be worthwhile to keep up with its evolution, wherever it leads.

A good post. I enjoyed the specifics.


Thank you! More, please. 🙂


Very simple and clear introduction. A lot of people, like me, have heard these terms before but can’t find a source to explain everything like this. Thank you!


Great post. Two obsessions are use of big data sets, and the desire to make AI human-like.

For pattern recognition, such as spotting disease, machine learning is great. For dealing with complex, changing systems, the current machine learning approach fails due to complexity.

There is no practical value to making AI human-like, as to be human is to embrace negative traits such as hate and lust. Nobody in their right mind would create an AI that could be hateful or lustful, so these traits will be absent and thus an AI will be less human-like without these traits. Also, by trying to replicate the human in AI, a rich diversity of ways to create AI is lost, because we could have tree-like AI, something based upon an octopus, and all the other rich templates that nature offers us to replicate. AI of the future may not be what we now think it will become. I think we might currently be on a dead-end path, but we might spin off into paths we never thought of by looking at AI again.


Interesting post on AI! Hopefully AI can lead to a better life for humanity in general, not just the rich capitalist bosses.
